Justice-focused organizations sit on a gold mine of restorative justice program data, but most of it never connects cleanly to what happens in court. Case notes, conference outcomes, and victim feedback live in one system. Charges, dispositions, and reoffending records live somewhere else, often behind a portal that nobody has time to scrape.

For executive directors, COOs, CFOs, and operations or technology leaders, integrating restorative justice program data with legal outcomes is no longer a tech hobby. It is a strategy question. It shapes how you answer funders, regulators, and boards when they ask, “What actually changes when people go through your program?” This post stays practical and walks through a simple path: what to track, how to connect it, and how to govern it safely.

Illustration of restorative justice participants connected to court outcomes through data links. Image created with AI.

Why Integrating Restorative Justice Program Data with Legal Outcomes Matters for Your Organization

Integrating restorative justice program data with legal outcomes is not about chasing a data fad. It is about proving that your work changes what courts and systems actually do with people.

Restorative justice (RJ) programs capture a rich picture of each case: who was harmed, who caused the harm, what was agreed to, and whether participants felt heard and safe. Legal outcomes data covers the system side of the story: charges, dispositions, sentencing, supervision terms, custody days, and later reoffending.

Right now, most organizations hold these stories in scattered tools. Program notes live in one case system, referral details in email, and outcome data in court portals that staff pull by hand. When funders or legislators ask for more than a recidivism rate, your team scrambles to stitch together spreadsheets the night before a board packet is due.

The research is already on your side. The Department of Justice’s meta-analysis of restorative justice principles in juvenile justice reports lower reoffending and higher victim satisfaction when RJ is used instead of traditional sanctions, with consistent patterns across sites and program types. More recent work from the National Bureau of Economic Research, an analysis of restorative justice conferencing and recidivism in the Make-it-Right program, finds sizable drops in new arrests and custody when youth complete RJ conferencing.

The problem is not lack of evidence in the field. The problem is whether your organization can show that kind of evidence from your own data, at your own sites, in a way your board and partners can trust.

From scattered case stories to proof your board and funders can trust

Most leaders can tell powerful stories about a single case. A young person avoids a felony, repairs harm with the person they injured, finishes school, and never comes back into the system.

Those stories matter. They also hit a ceiling when a funder asks, “Is this pattern or luck?”

Integrating RJ program data with legal outcomes lets you move from scattered anecdotes to structured proof. Instead of a pile of PDFs and ad hoc exports, you get:

- Cohort views that compare RJ participants with similar non-RJ cases

- Trend lines that show custody days, charge reductions, or dismissals over several years

- Side-by-side views of outcomes by site, partner court, or referral source

Now your grant renewals and board packets do not depend on last-minute spreadsheet marathons. Reporting turns into a predictable process, not a fire drill. Over time, that same integration also exposes your recurring data and reporting problems so you can address them as part of a broader modernization plan, not one grant at a time.

Using integrated data to shape programs, partners, and policy

Once RJ and legal outcomes data are linked, you can ask sharper questions that change real decisions:

- Which referral sources send participants who complete RJ and stay out of court the longest?

- Which harms or charge types show the largest drop in new offenses after RJ?

- How do outcomes look for youth, immigrants, or people coming out of custody, compared with others?

Answers to these questions guide program choices, not just reports. You decide who to prioritize, what supports to add, and where to push for better referrals.

Integrated data also changes how you show up with partners. You can walk into meetings with prosecutors, judges, or probation officers and say, “Here is what happens to your cases when they go through RJ, compared to your docket overall.” For policy advocacy, you can pair community stories with clear charts when talking about diversion statutes or funding.

This is not theory. Justice reinvestment and reintegration efforts that join program data with system metrics have already shown fewer new charges and shorter custody spells. You are applying the same logic to your RJ work, tailored to your people and your partnerships.

What Data You Actually Need to Connect Restorative Justice Programs to Legal Outcomes

You do not need a perfect academic dataset to get started. You need a small, consistent set of fields that your staff can fill in every time.

Keep the goal simple: capture RJ program activity in a clear way, then link a short list of legal outcomes to each case.

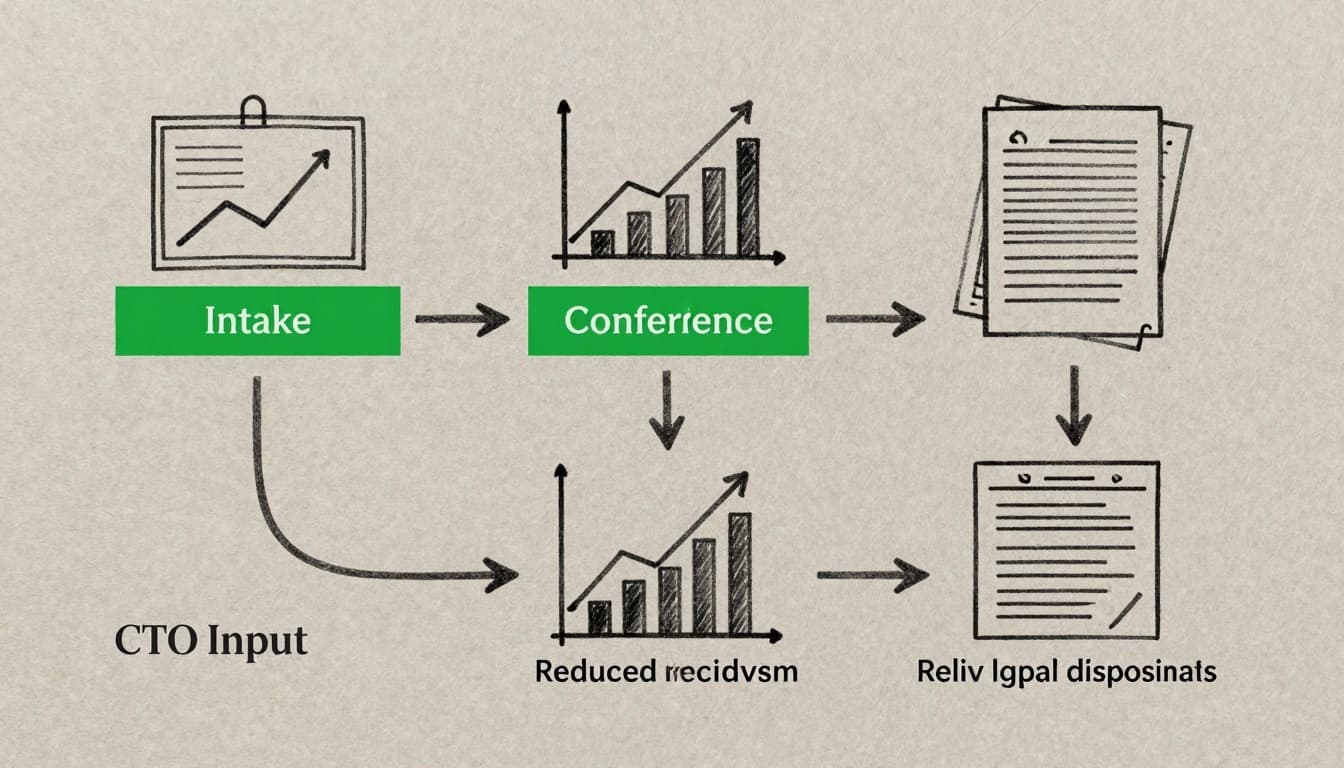

Illustration of restorative justice steps flowing into measurable legal outcomes. Image generated by AI.

Core restorative justice program data to track in a consistent way

Every RJ program, no matter the size, should track at least:

- A unique participant ID or safe key (not a Social Security number)

- Referral source (court, prosecutor, school, community partner)

- Harm or charge category (simple, standard groupings are fine)

- Dates and status of key RJ steps (intake, prep, conference, agreement)

- Agreement terms (restitution, repair activities, services, apologies)

- Completion status (completed, partial, not completed, withdrew)

- Simple feedback from victims and participants (satisfaction, sense of safety, sense of accountability)

The important part is not fancy software. It is standard definitions. If “completed” means one thing in one site and something else in another, your data loses power fast.

You can start in a modern case tool or in a well-structured spreadsheet. The key is to pick your fields, define them once, and train staff to use them the same way. If you later invest in broader fractional technology and data leadership support, this consistent core will make every future step faster and cheaper.
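One way to make "define them once" concrete is to write the core fields down as a small schema. The sketch below is only an illustration: the class names, the field names, and the 1 to 5 satisfaction scale are assumptions, not a standard, and your own definitions should replace them.

```python
from dataclasses import dataclass, field
from datetime import date
from enum import Enum
from typing import List, Optional


class CompletionStatus(Enum):
    """One shared definition of completion, used the same way at every site."""
    COMPLETED = "completed"
    PARTIAL = "partial"
    NOT_COMPLETED = "not_completed"
    WITHDREW = "withdrew"


class ReferralSource(Enum):
    COURT = "court"
    PROSECUTOR = "prosecutor"
    SCHOOL = "school"
    COMMUNITY_PARTNER = "community_partner"


@dataclass
class RJCase:
    participant_key: str                 # safe key, never a Social Security number
    referral_source: ReferralSource
    harm_category: str                   # hypothetical grouping, e.g. "property"
    intake_date: date
    completion_status: CompletionStatus
    conference_date: Optional[date] = None
    agreement_date: Optional[date] = None
    agreement_terms: List[str] = field(default_factory=list)
    victim_satisfaction: Optional[int] = None   # assumed 1-5 scale


def parse_status(raw: str) -> CompletionStatus:
    """Normalize a status typed into a spreadsheet; reject undefined values."""
    cleaned = raw.strip().lower().replace(" ", "_")
    return CompletionStatus(cleaned)     # raises ValueError for anything else
```

Rejecting unknown values at entry time is the design choice that matters here. A status like "done" or "finished" fails loudly instead of quietly becoming a fifth category that one site uses and another does not.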

Key legal outcomes to align with restorative justice data

On the system side, you also do not need everything. You need a repeatable way to pull the same few fields for each case that touches RJ:

- Case disposition (dismissed, diverted, convicted, other)

- Sentencing details, if any (custody, probation, fines, time served)

- Changes in charges over time (felony to misdemeanor, multiple counts down to one)

- Future court appearances or violations tied to the same person or case

- Custody days linked to the case

- New offenses or violations in a clear follow-up window

A “follow-up window” is just a shared clock. For example, you might agree to look at new charges in the 12 months after the case closes, or 24 months for more serious harms. The point is that everyone counts the same way, every time.
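To show what "counting the same way" can look like, here is a minimal sketch of a follow-up window check. The function names are hypothetical, and two rules are assumptions you would need to agree on with partners: a charge filed on the closing date itself does not count, and a charge filed on the last day of the window does.

```python
import calendar
from datetime import date


def add_months(start: date, months: int) -> date:
    """Return the date `months` later, clamping to the last day of short months."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


def new_charges_in_window(case_closed, charge_dates, window_months=12):
    """Charges filed after the close date and on or before the window's end."""
    window_end = add_months(case_closed, window_months)
    return [c for c in charge_dates if case_closed < c <= window_end]


def window_fully_observed(case_closed, as_of, window_months=12):
    """True once the whole window has elapsed, so the case can be counted fairly."""
    return as_of >= add_months(case_closed, window_months)
```

The last function guards against a common reporting mistake: a case that closed three months ago has not had 12 months to show a new charge, so including it makes any program look better than it is.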

Treat privacy and confidentiality as design rules from day one. Decide what you truly need, then match records in a way that protects people from avoidable exposure.

A Practical Roadmap for Integrating Restorative Justice Program Data with Legal Outcomes

The work sounds big. It does not have to start that way. You can treat this as a sequence of small, controlled moves that reduce risk and prove value at each step.

Illustration of a three-step roadmap from data map to secure integration and dashboards. Image created with AI.

Start with a simple data map and one or two high-value questions

Begin with a whiteboard, not a tool. Map where RJ data lives today, such as:

- Case management systems

- Shared drives or spreadsheets

- Staff notes or surveys

Then note where legal outcomes live:

- Court or prosecutor portals

- Probation or supervision systems

- Corrections or jail data

Now pick one or two priority questions that matter to leadership today. For example:

- “How often do RJ participants have new charges within 12 months, compared with similar non-RJ cases?”

- “How many custody days do we avoid when a case goes to RJ instead of traditional court?”

A tight question set keeps the project from turning into a giant, unfocused warehouse idea. This is also where a partner experienced in structuring technology and data engagements can help you draw a boundary around scope before anyone starts moving fields around.
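Once records are linked, the two example questions above reduce to a small calculation per cohort. The sketch below assumes a hypothetical `LinkedCase` record with a cohort label, a flag for a new charge within 12 months, and custody days; none of these names come from a real system.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, Iterable


@dataclass
class LinkedCase:
    cohort: str                    # hypothetical labels: "rj" or "comparison"
    new_charge_within_12m: bool
    custody_days: int


def cohort_summary(cases: Iterable[LinkedCase]) -> Dict[str, dict]:
    """Case count, new-charge rate, and mean custody days for each cohort."""
    cases = list(cases)
    summary = {}
    for cohort in sorted({c.cohort for c in cases}):
        group = [c for c in cases if c.cohort == cohort]
        summary[cohort] = {
            "n": len(group),
            "new_charge_rate": sum(c.new_charge_within_12m for c in group) / len(group),
            "mean_custody_days": mean(c.custody_days for c in group),
        }
    return summary
```

A gap between the two cohorts is a starting point for discussion, not proof of impact. How "similar non-RJ cases" are chosen (charge type, age, prior history) drives how much weight the comparison can bear, and small cohorts should be reported with their counts.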

Design safe, lightweight data sharing and matching

Next, decide how to connect RJ records with legal data in a safe way. Common options include:

- Shared case numbers that both your program and the court already use

- Safe hashed IDs created from existing identifiers with a secret key, managed by a trusted partner

- Controlled lookup tables stored in a secure location, with access limited to a very small group

Put simple guardrails in writing. Spell out who can see what, for what reason, and for how long. Make sure RJ case notes and confidential conversations stay on the program side, not in shared files.
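For the hashed ID option, one common approach is a keyed hash (HMAC) rather than a plain hash, because identifiers like case numbers and birth dates are easy to guess and a plain hash can be reversed by trying candidates. The sketch below is an assumption about how this could work, not a vetted privacy design: the function names, the choice of case number plus date of birth, and the sample values are all made up.

```python
import hashlib
import hmac
import unicodedata


def normalize(value: str) -> str:
    """Trim, lowercase, and collapse spacing so both sides hash the same text."""
    text = unicodedata.normalize("NFKC", value)
    return " ".join(text.lower().split())


def match_key(secret: bytes, case_number: str, date_of_birth: str) -> str:
    """Keyed SHA-256 (HMAC) over normalized identifiers, returned as hex."""
    message = "|".join([normalize(case_number), normalize(date_of_birth)])
    return hmac.new(secret, message.encode("utf-8"), hashlib.sha256).hexdigest()


# Made-up values for illustration. The real secret should be long, random,
# and stored outside code, with access limited to the trusted partner.
secret = b"replace-with-a-long-random-secret"
rj_key = match_key(secret, " cr-2023-00417 ", "2006-04-09")
court_key = match_key(secret, "CR-2023-00417", "2006-04-09")
```

Here `rj_key` and `court_key` come out identical because normalization removes the spacing and casing differences, so the two files can be joined on the key without either side exchanging the raw identifiers. Anyone who holds the secret can still test guesses, which is why key custody belongs in the written guardrails.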

Many organizations start with manual exports and secure file transfer. Only after they see real value in quarterly reports do they consider more automated feeds. That step-by-step pattern keeps risk low while you learn what is actually useful.

Build dashboards and feedback loops that staff will actually use

Once you have linked data, resist the urge to build a giant dashboard wall. A strong first version often fits on one or two screens and shows:

- RJ referrals and completions over time

- Completion rates by site or referral source

- A small set of linked legal outcomes, such as dismissals, custody days, or new charges, by cohort
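The first two tiles above need very little logic. As a rough sketch, assuming intake dates and simple `(group, status)` pairs exported from your case tool (both input shapes and all function names are hypothetical):

```python
from collections import Counter, defaultdict
from datetime import date


def quarter_label(d: date) -> str:
    """Label a date by calendar quarter, e.g. 2024-Q1."""
    return f"{d.year}-Q{(d.month - 1) // 3 + 1}"


def referrals_by_quarter(intake_dates):
    """Count referrals per calendar quarter, in chronological order."""
    counts = Counter(quarter_label(d) for d in intake_dates)
    return dict(sorted(counts.items()))


def completion_rates(cases):
    """cases: iterable of (group, status) pairs; returns share completed per group."""
    totals = defaultdict(int)
    completed = defaultdict(int)
    for group, status in cases:
        totals[group] += 1
        if status == "completed":
            completed[group] += 1
    return {group: completed[group] / totals[group] for group in totals}
```

The `group` value can be a site or a referral source, so the same function serves both views. Showing the count next to each rate helps staff avoid reading too much into a site with only a handful of cases.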

Then set a simple review rhythm. Many organizations start with a quarterly learning session that brings together program, legal, and data staff. The goal is not to admire charts. The goal is to ask, “What do we need to change next quarter?” and commit to one or two small adjustments.

Keep front-line staff and community partners in mind. Ask what they need to see to feel that the data reflects their work and their people. Over time, these sessions also feed into your long-term data and digital risk insights, so you are not guessing about where to invest next.

Conclusion

Integrating restorative justice program data with legal outcomes is not about buzzwords or buying another platform. It is about building a clear, safe, modest data backbone that shows how your programs change legal paths and community safety in real terms.

For you as an executive, the payoff is direct: calmer reporting cycles, stronger trust with courts and partners, safer handling of sensitive stories, and a clearer case for funding and policy shifts. You get to replace last-minute spreadsheets with a steady flow of evidence your board can stand behind.

You do not need to fix everything this year. Start with one or two questions and a small integration pilot. If your systems feel fragile or scattered, consider pulling in a seasoned, fractional partner and scheduling a short strategy call to map the first few steps. The work is serious, but it is also manageable, and your data is ready to do more for the people you serve.