Artificial intelligence is reshaping how businesses operate, but it also introduces complex ethical and regulatory challenges. Leaders face tough choices when deploying powerful AI tools, making robust AI governance essential for responsible progress.

This guide offers a practical roadmap for navigating the evolving landscape of AI governance. You will discover the core principles, explore ethical frameworks, understand compliance requirements, and gain actionable steps for implementation and improvement.

Are you ready to build trust, manage risks, and unlock innovation? Dive in to learn how effective AI governance can drive sustainable success.

Visit https://www.ctoinput.com to learn more and to connect with a member of the CTO Input team.

Spend a few minutes exploring the rest of the articles on the CTO Input blog at https://blog.ctoinput.com.

Understanding AI Governance: Foundations and Frameworks

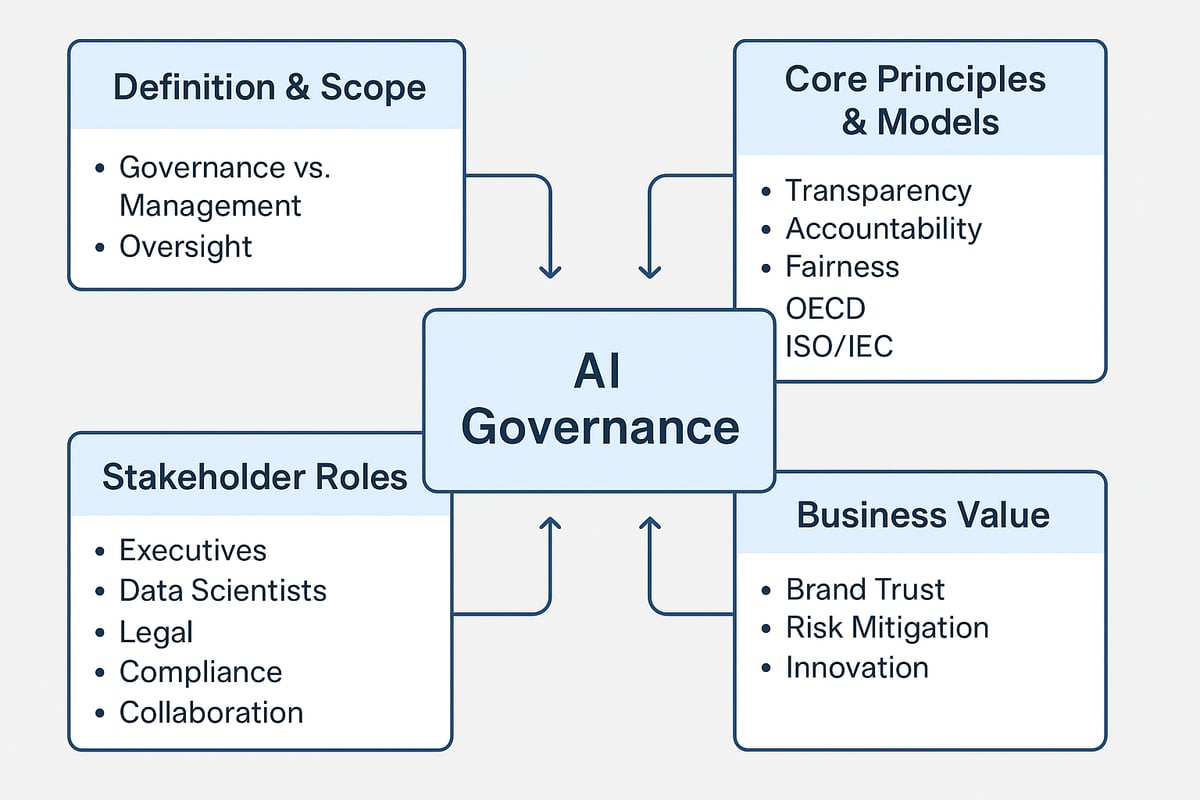

Navigating the world of AI governance begins with a clear understanding of its foundations. As organizations embrace artificial intelligence, setting effective guardrails becomes essential for responsible and innovative deployment. This section explores the key concepts, principles, stakeholders, and business value underpinning AI governance.

Defining AI Governance

AI governance refers to the structures, processes, and policies that guide the responsible development and deployment of artificial intelligence. Its scope covers not only technical controls but also ethical, legal, and organizational dimensions. Unlike management, which focuses on day-to-day operations, AI governance sets the strategic direction and ensures oversight at every stage.

Effective AI governance clarifies who designs, who manages, and who oversees AI systems. This separation is vital for preventing conflicts of interest and ensuring accountability. Without strong governance, organizations risk unintended consequences, regulatory breaches, and erosion of public trust.

Core Principles and Models

At the heart of AI governance are three pillars: transparency, accountability, and fairness. These principles guide organizations to build AI systems that are explainable, unbiased, and just. Leading frameworks, such as those from the OECD and ISO/IEC, offer comprehensive models for embedding these values into practice.

Internal policies lay the groundwork, but external regulations shape the boundaries. Aligning technology initiatives with organizational strategy is crucial for success. For deeper insights, explore frameworks to align technology with strategy, which can help organizations ensure their AI governance supports both compliance and business goals.

Stakeholder Roles and Responsibilities

Successful AI governance depends on the active involvement of diverse stakeholders. Executives set the vision, data scientists build and maintain models, and legal and compliance teams ensure adherence to laws and ethical norms. Cross-functional collaboration is essential, as no single group can manage all aspects of governance alone.

A lack of clear roles can have serious consequences. For example, a financial institution once faced reputational damage when its AI credit-scoring model produced biased results due to insufficient oversight from compliance teams. This highlights the need for defined responsibilities across the organization.

The Business Case for Effective AI Governance

Implementing robust AI governance delivers tangible business benefits. It strengthens brand trust, reduces operational and regulatory risk, and enables innovation within safe boundaries. According to Gartner, 70 percent of organizations cite governance gaps as a primary barrier to AI adoption.

Consider a retailer that suffered significant reputation loss after deploying an AI-driven recommendation engine without adequate oversight. The resulting bias in product suggestions led to public backlash and declining customer loyalty. This case underscores how effective AI governance is not just a compliance exercise, but a driver of sustainable growth.

Navigating AI Ethics: Principles, Challenges, and Solutions

Artificial intelligence is rapidly reshaping business, yet ethical considerations remain at the heart of responsible AI governance. As organizations deploy AI at scale, understanding and applying ethical principles is crucial for building systems that are fair, transparent, and trustworthy.

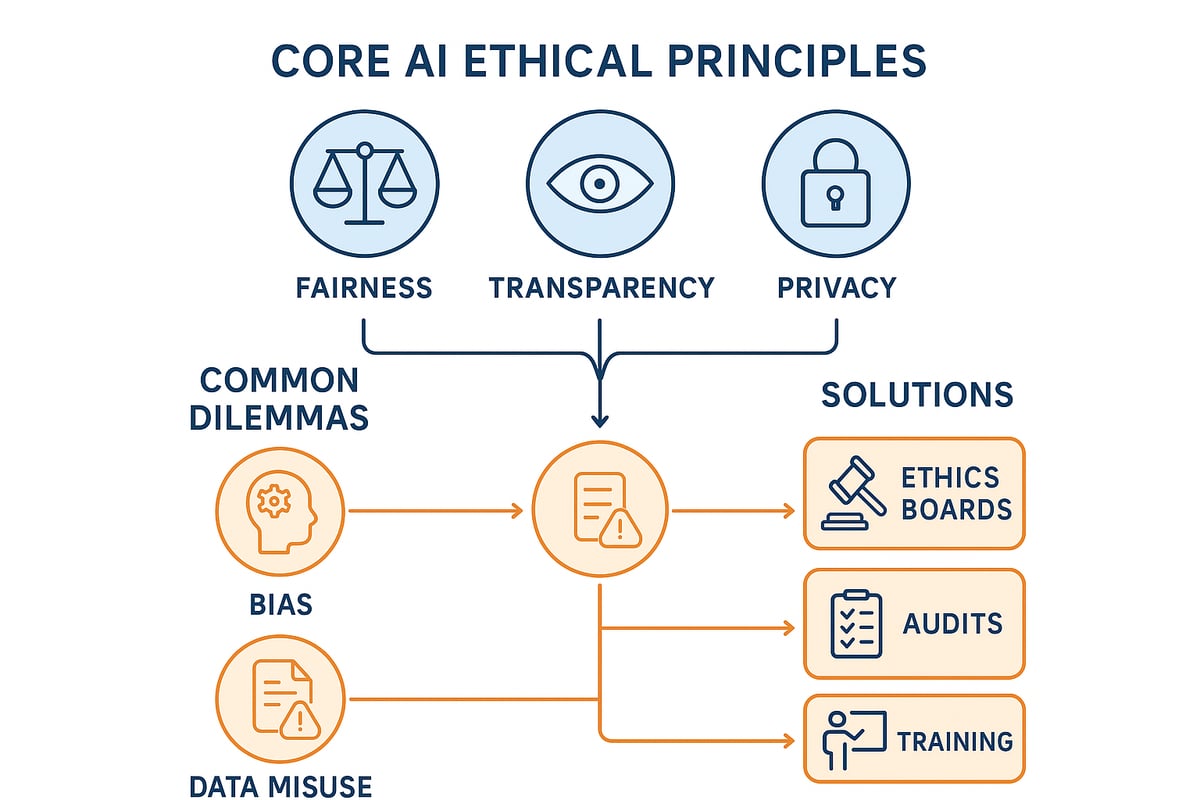

Key Ethical Principles in AI

Effective AI governance begins with a foundation of ethical principles. The most widely recognized include:

- Fairness: Ensuring AI outcomes are impartial and just for all users.

- Non-discrimination and Inclusivity: Proactively preventing bias against any group and designing for diverse populations.

- Privacy and Data Protection: Respecting user privacy, securing personal data, and complying with legal standards.

- Explainability and Transparency: Making AI decisions understandable and open to scrutiny.

Organizations that embed these principles into their AI governance frameworks set the stage for responsible innovation. These principles not only protect individuals but also strengthen organizational credibility and social license to operate.

Common Ethical Dilemmas

Despite best intentions, organizations face significant ethical dilemmas as they scale their AI governance efforts. Common challenges include:

- Bias in Training Data and Algorithms: Historical data can encode social prejudices, leading to unfair outcomes.

- Balancing Innovation with Societal Impact: Rapid deployment may outpace safeguards, risking unintended harm.

- Case Example: A healthcare AI system was found to deny care to certain patients due to flawed data, highlighting the importance of robust ethical oversight.

These dilemmas show why AI governance is not just a technical issue, but a societal one. Addressing them requires vigilance, transparency, and a willingness to adapt.
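The bias dilemma above becomes easier to discuss once it is measured. The following is a minimal sketch, not a complete fairness audit: it compares the rate of favorable outcomes across groups and flags a large gap for human review. The group labels, the sample decisions, and the 0.8 review threshold (loosely based on the "four-fifths" rule of thumb) are all illustrative assumptions.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes per group.

    `records` is an iterable of (group, approved) pairs, where `approved`
    is True when the model produced the favorable outcome.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        if approved:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome
    return min(rates.values()) / highest


# Hypothetical decisions: (group label, was the outcome favorable?)
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)

REVIEW_THRESHOLD = 0.8  # assumed trigger for a human review, not a legal standard
needs_review = ratio < REVIEW_THRESHOLD
print(rates, round(ratio, 2), needs_review)
```

A single ratio like this is only a starting signal. A gap can have legitimate explanations, and parity on one metric does not rule out other forms of unfairness, so the result is best treated as a prompt for the kind of oversight described above.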

Strategies for Embedding Ethics in AI

To proactively address ethical risks, organizations should integrate ethics throughout the AI governance lifecycle. Proven strategies include:

- Establishing Ethics Review Boards: Cross-functional teams evaluate AI projects for ethical concerns.

- Integrating Ethical Audits: Regular assessments during development cycles help catch and correct issues early.

- Employee Training and Awareness: Ongoing education ensures teams understand ethical standards and their role in compliance.

For more insights on evolving your approach, explore AI’s Next Frontier: Why Ethics, Governance and Compliance Must Evolve. These strategies make ethical considerations a standard part of every AI initiative, supporting sustainable AI governance.

Measuring and Reporting Ethical Impact

Accountability is central to AI governance, making measurement and transparency essential. Leading practices include:

- AI Ethics Impact Assessments: Evaluate potential risks and benefits before deploying new systems.

- Public Transparency Reports: Share findings and actions with stakeholders to build trust.

- Benchmark: Research shows that companies with public AI ethics statements consistently outperform peers in consumer trust.

Proactive measurement and open reporting signal a commitment to responsible AI, reinforcing trust and supporting long-term success.

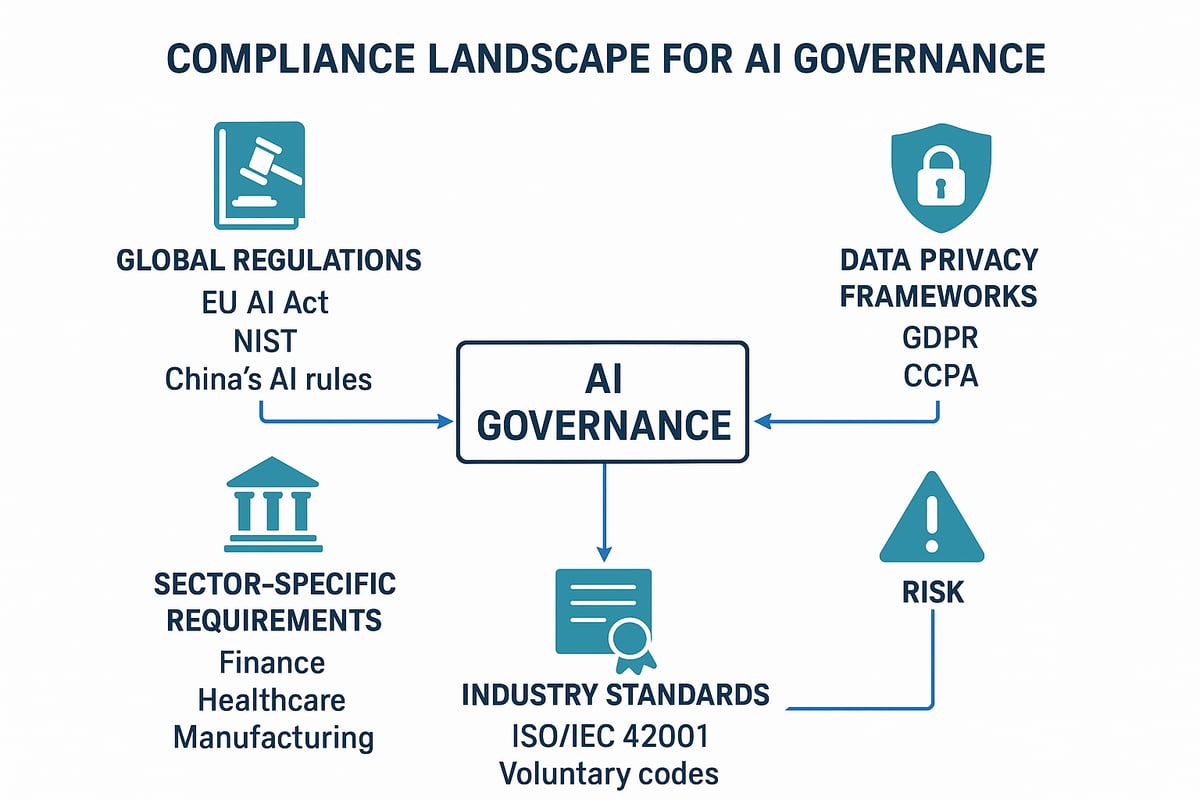

Compliance Landscape: Laws, Regulations, and Industry Standards

Navigating the compliance landscape is crucial for any organization implementing AI governance. As artificial intelligence becomes more embedded across sectors, the rules and standards continue to evolve. Effective AI governance depends on staying ahead of these changes, ensuring responsible deployment and reducing risk.

Overview of Key AI Regulations

Global regulatory trends are rapidly shaping the requirements for AI governance. The European Union’s AI Act, the voluntary NIST AI Risk Management Framework in the United States, and China’s rules on algorithmic recommendation and generative AI each set distinct expectations for transparency, risk management, and ethical use. Sector-specific regulations in finance, healthcare, and manufacturing further define what is permitted, often requiring rigorous audits and documentation.

Failure to comply can result in severe penalties, as seen in the banking sector, where institutions have faced multimillion-dollar fines for inadequate oversight. For a deeper dive into how these regulations are shaping the global landscape, see AI Governance in 2025: Navigating Global AI Regulations and Ethical Frameworks. Staying informed is essential for leaders seeking robust AI governance.

Data Privacy and Security Obligations

Data privacy is a cornerstone of AI governance. Regulations like GDPR and CCPA mandate strict controls over how personal data is collected, processed, and stored. Organizations should practice data minimization, obtain clear consent or establish another lawful basis for processing, and anonymize or pseudonymize sensitive information within AI systems.

These frameworks require continuous monitoring of data flows and proactive risk assessment. Without strong data governance, AI projects risk violating privacy laws, which can result in significant legal and financial consequences. Embedding privacy-by-design principles supports compliance and builds trust in AI governance.
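As a rough illustration of data minimization in practice, the sketch below keeps only an allow-list of fields before a record reaches a model and swaps the raw identifier for a keyed hash. The field names, the `minimize` and `pseudonymize` helpers, and the demo key are hypothetical. Note that a keyed hash is pseudonymization rather than anonymization: whoever holds the key can re-link records, so the output generally still counts as personal data.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields the model is permitted to see.
ALLOWED_FIELDS = {"age_band", "region", "account_tenure_months"}


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256).

    This is pseudonymization, not anonymization: anyone holding the key
    can re-link records, so the key needs its own access controls.
    """
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, secret_key: bytes) -> dict:
    """Keep only allow-listed fields and swap the raw ID for a pseudonym."""
    reduced = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    reduced["subject_ref"] = pseudonymize(record["customer_id"], secret_key)
    return reduced


# Hypothetical raw record as it might arrive from a source system.
raw = {
    "customer_id": "C-1001",
    "email": "person@example.com",
    "age_band": "35-44",
    "region": "EU-West",
    "account_tenure_months": 27,
}

key = b"demo-key-do-not-use"  # assumption: in practice, load from a secrets manager
clean = minimize(raw, key)
print(sorted(clean))
```

An allow-list is used here instead of a block-list so that new fields added upstream are excluded by default until someone deliberately approves them.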

Industry Standards and Certifications

Industry standards play a pivotal role in AI governance, offering a blueprint for responsible AI management. The ISO/IEC 42001 standard provides comprehensive guidance for establishing, implementing, and maintaining AI management systems. Voluntary codes of conduct and best practice frameworks offer additional layers of assurance.

By aligning with these standards, organizations demonstrate their commitment to ethical AI and reduce the risk of regulatory breaches. Certifications not only streamline compliance but also enhance reputation, making AI governance a strategic advantage in competitive markets.

Risk of Non-Compliance

The risks of ignoring AI governance are substantial. Legal actions, hefty fines, and reputational harm can follow from non-compliance. For context, IBM's Cost of a Data Breach research has put the global average cost of a data breach above $4 million in recent years, and AI systems that mishandle personal data are exposed to the same kind of loss.

Beyond the financial impact, organizations may lose the trust of customers, partners, and regulators. Robust AI governance is not just a defensive measure, but a proactive strategy for sustainable growth and innovation.

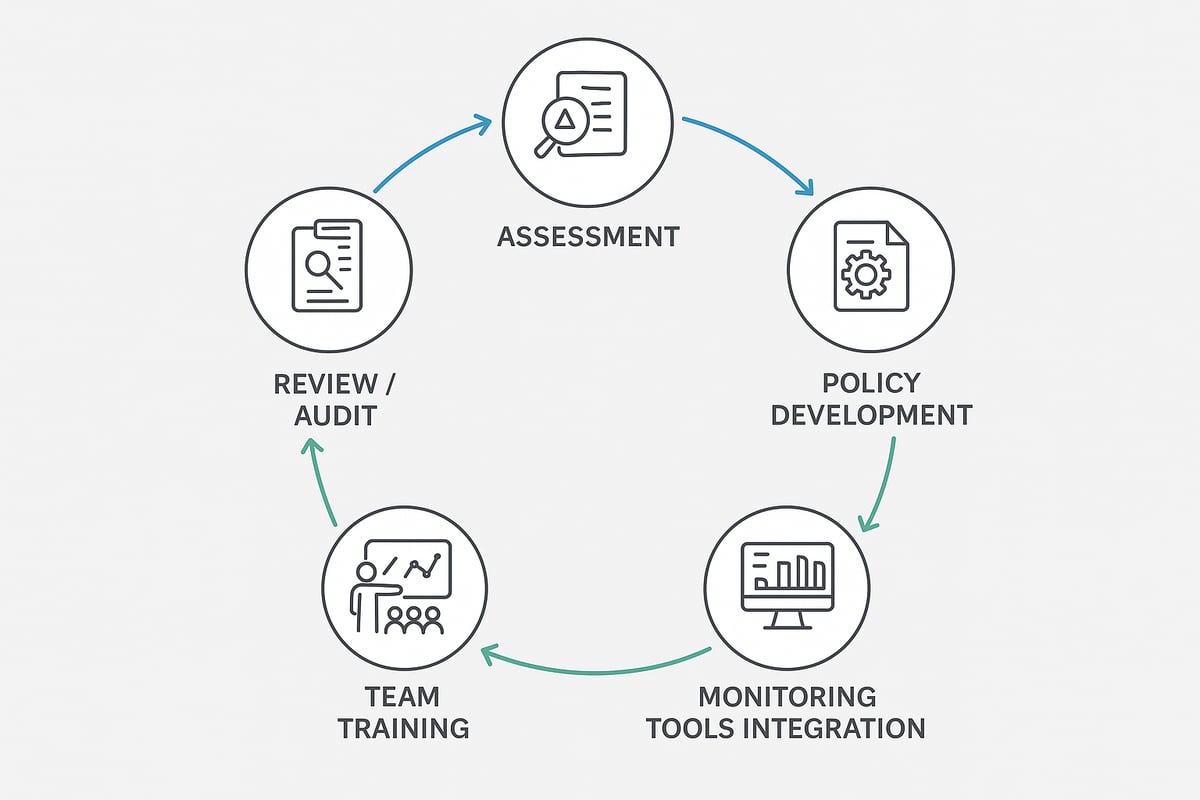

Implementing AI Governance: Steps for Building a Robust Framework

Establishing a strong AI governance framework requires a systematic approach. Each step builds on the last, ensuring your organization can innovate responsibly while managing risk. The following roadmap guides you through the essential phases of implementing AI governance for long-term success.

Step 1: Assess Current AI Capabilities and Risks

Begin by mapping all AI assets and use cases across your organization. Identify which applications are high risk, such as those handling sensitive data, making critical decisions, or impacting customers directly.

Evaluate each system for ethical concerns, potential biases, and compliance requirements. This foundational step in AI governance helps prioritize resources and sets the stage for informed decision-making.
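The inventory from Step 1 can start as something very simple. The sketch below is one possible shape, assuming a small `AIUseCase` record with the three risk factors named above (sensitive data, critical decisions, direct customer impact). The scoring rule and tier cut-offs are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass


@dataclass
class AIUseCase:
    """One entry in a hypothetical AI inventory."""
    name: str
    handles_sensitive_data: bool
    makes_critical_decisions: bool
    customer_facing: bool

    def risk_score(self) -> int:
        """Count how many of the three risk factors apply (0 to 3)."""
        return sum([
            self.handles_sensitive_data,
            self.makes_critical_decisions,
            self.customer_facing,
        ])

    def risk_tier(self) -> str:
        """Map the score to a tier; the cut-offs are assumptions."""
        score = self.risk_score()
        if score >= 2:
            return "high"
        if score == 1:
            return "medium"
        return "low"


# Hypothetical inventory entries.
inventory = [
    AIUseCase("product recommendations", False, False, True),
    AIUseCase("credit-scoring model", True, True, True),
    AIUseCase("internal log summarizer", False, False, False),
]

# Review the riskiest systems first.
prioritized = sorted(inventory, key=lambda use_case: use_case.risk_score(), reverse=True)
tiers = {use_case.name: use_case.risk_tier() for use_case in prioritized}
print(tiers)
```

Even a coarse three-factor score like this gives review boards a defensible order in which to examine systems. The factors and weights can be refined once the inventory is complete.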

Step 2: Develop Comprehensive Governance Policies

Draft clear, actionable policies that outline expectations for data usage, model development, and deployment. Ensure these policies align with legal, ethical, and industry standards.

Consider referencing AI transformation strategy best practices to ensure your AI governance policies are integrated with broader business goals. Well-crafted policies serve as a blueprint for responsible innovation.

Step 3: Assign Roles and Establish Oversight Structures

Define who is responsible for each aspect of AI governance. Create cross-functional committees with representatives from IT, legal, compliance, and business units.

Establish escalation paths for ethical or compliance issues, ensuring rapid response when concerns arise. Clear roles foster accountability and prevent gaps in oversight.

Step 4: Integrate Tools and Technologies for Monitoring

Leverage AI auditing and explainability tools to track system performance, detect anomalies, and ensure transparency. Implement monitoring dashboards to provide real-time insights into model operations and compliance status.

Regular monitoring is vital to maintaining trust and meeting regulatory obligations in your AI governance framework.
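Behind most monitoring dashboards sits a set of rules that turn metrics into alerts. The sketch below shows that idea in its simplest form, assuming a hypothetical `MetricRule` type and made-up metric names and thresholds. It is not tied to any particular auditing or observability product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MetricRule:
    """A bound that a monitored metric is expected to stay within."""
    metric: str
    minimum: Optional[float] = None
    maximum: Optional[float] = None


def evaluate(rules, snapshot):
    """Return human-readable alerts for one snapshot of metric values."""
    alerts = []
    for rule in rules:
        value = snapshot.get(rule.metric)
        if value is None:
            # A missing metric is itself a governance signal.
            alerts.append(f"{rule.metric}: no value reported")
        elif rule.minimum is not None and value < rule.minimum:
            alerts.append(f"{rule.metric}: {value} is below minimum {rule.minimum}")
        elif rule.maximum is not None and value > rule.maximum:
            alerts.append(f"{rule.metric}: {value} is above maximum {rule.maximum}")
    return alerts


# Hypothetical rules and a hypothetical snapshot from a model in production.
rules = [
    MetricRule("accuracy", minimum=0.90),
    MetricRule("disparate_impact_ratio", minimum=0.80),
    MetricRule("p95_latency_ms", maximum=500),
]
snapshot = {"accuracy": 0.93, "disparate_impact_ratio": 0.72}

alerts = evaluate(rules, snapshot)
for alert in alerts:
    print(alert)
```

Treating a missing metric as an alert, instead of silently skipping it, keeps a broken reporting pipeline from looking like a healthy model.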

Step 5: Train Teams and Foster a Governance Culture

Offer regular training on ethical AI practices, compliance standards, and organizational policies. Equip teams with the knowledge to identify and address governance challenges proactively.

Encourage open communication and a culture of responsibility, making AI governance a shared organizational value.

Step 6: Review, Audit, and Improve

Schedule periodic internal and external audits to evaluate the effectiveness of your AI governance structure. Use audit findings to update policies and address new risks or regulatory changes.

Continuous improvement ensures your framework remains resilient and adaptable as technology and regulations evolve.

Building robust AI governance is a journey that requires commitment, collaboration, and ongoing refinement.

Continuous Improvement: Monitoring, Auditing, and Adapting AI Governance

Continuous improvement is vital for any effective AI governance program. As AI systems evolve, so do the risks, regulatory requirements, and stakeholder expectations. Organizations must build agile processes to monitor, audit, and adapt their AI governance frameworks, ensuring ongoing compliance and ethical performance.

Establishing Ongoing Monitoring Processes

Real-time monitoring is the foundation of proactive AI governance. Organizations should implement automated tools that track AI system performance, data flows, and compliance metrics. These tools can provide instant alerts if anomalies, policy violations, or security threats are detected.

A robust monitoring setup enables early identification of issues, reducing the risk of AI models drifting from intended outcomes. Teams can also use dashboards to visualize trends, making it easier to spot potential governance gaps before they escalate.
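One common way to catch the drift mentioned above is to compare the distribution of a model input (or score) in production against a baseline. The sketch below computes a Population Stability Index (PSI) over fixed bins. The sample values, the bin edges, and the 0.2 alert threshold are illustrative assumptions, and the function expects non-empty samples.

```python
import math


def psi(expected, actual, bin_edges):
    """Population Stability Index between two samples of one numeric feature.

    `bin_edges` are the interior cut points; values below the first edge and
    at or above the last edge fall into the two outer bins.
    """
    def shares(values):
        counts = [0] * (len(bin_edges) + 1)
        for value in values:
            index = sum(value >= edge for edge in bin_edges)
            counts[index] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(count / len(values), 1e-6) for count in counts]

    expected_shares = shares(expected)
    actual_shares = shares(actual)
    return sum(
        (a - e) * math.log(a / e)
        for e, a in zip(expected_shares, actual_shares)
    )


# Hypothetical model scores at validation time and in production.
baseline = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 0.95]
live = [0.6, 0.7, 0.8, 0.8, 0.9, 0.9, 0.95, 0.95, 0.99, 0.4]
edges = [0.25, 0.5, 0.75]

DRIFT_THRESHOLD = 0.2  # assumed alert level; teams choose their own cut-offs
drift = psi(baseline, live, edges)
drift_detected = drift > DRIFT_THRESHOLD
print(round(drift, 3), drift_detected)
```

A PSI near zero means the two distributions look alike, and larger values mean the live data has moved away from the baseline. The metric says nothing about why the shift happened, so an alert should start an investigation and not trigger an automatic rollback.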

Conducting Regular Audits and Reviews

Regular audits are essential for maintaining the integrity of AI governance structures. Both internal and external audits help ensure policies are followed, data is protected, and ethical standards are met. Internal reviews allow organizations to catch issues early, while external audits provide independent validation.

One common challenge is tool sprawl, where teams use too many disconnected solutions, complicating oversight. For insights on addressing this, see Tool sprawl as a governance issue. Standardizing tools and audit processes streamlines reviews and supports consistent governance.

Adapting to Regulatory and Technological Change

The regulatory landscape for AI governance is always shifting. Organizations must stay current with new laws, privacy mandates, and industry standards. When regulations change, governance frameworks should be promptly updated to maintain compliance and minimize risk.

Technological advances may also introduce new risks or opportunities. Establishing a structured process for reviewing and updating policies ensures AI governance remains effective as both technology and legal requirements evolve.

Leveraging Feedback and Stakeholder Engagement

Stakeholder input is critical for refining AI governance practices. Regularly collecting feedback from users, customers, and internal teams reveals practical issues and helps identify areas for improvement. Engaging a diverse group of stakeholders promotes fairness, inclusivity, and transparency.

Case studies show that when organizations actively incorporate feedback, they often see measurable improvements in AI fairness and trust. Open communication channels make it easier to adapt governance policies in response to real-world concerns.

Reporting and Transparency

Transparent reporting is a hallmark of mature AI governance. Organizations should publish regular reports detailing AI system performance, audit results, and steps taken to address ethical or compliance issues. Public transparency builds trust with customers, partners, and regulators.

Best practices include sharing high-level findings, outlining actions taken, and making governance documentation accessible. This openness not only improves stakeholder confidence but also positions the organization as a leader in responsible AI use.

As you’ve seen throughout this guide, effective AI governance isn’t just about compliance—it’s about building trust, reducing risk, and unlocking real business value. If you’re ready to move from theory to action, I encourage you to take the next step. Let’s work together to assess where your technology stands, uncover gaps, and create a roadmap that aligns AI initiatives with your organization’s goals. You don’t have to navigate this complex landscape alone—we’re here to help you make technology your competitive advantage.
