Did you know that over 70% of organizations now use artificial intelligence to drive business value? As adoption accelerates, concerns about AI ethics, risk, and accountability are rising with it. The need for effective AI oversight has never been greater: organizations must balance innovation with protection for customers, employees, and communities. This article delivers a practical roadmap for responsible AI oversight, covering its foundations, core principles, actionable steps, essential tools, best practices, and emerging trends.

Understanding AI Oversight: Foundations and Importance

Defining AI Oversight

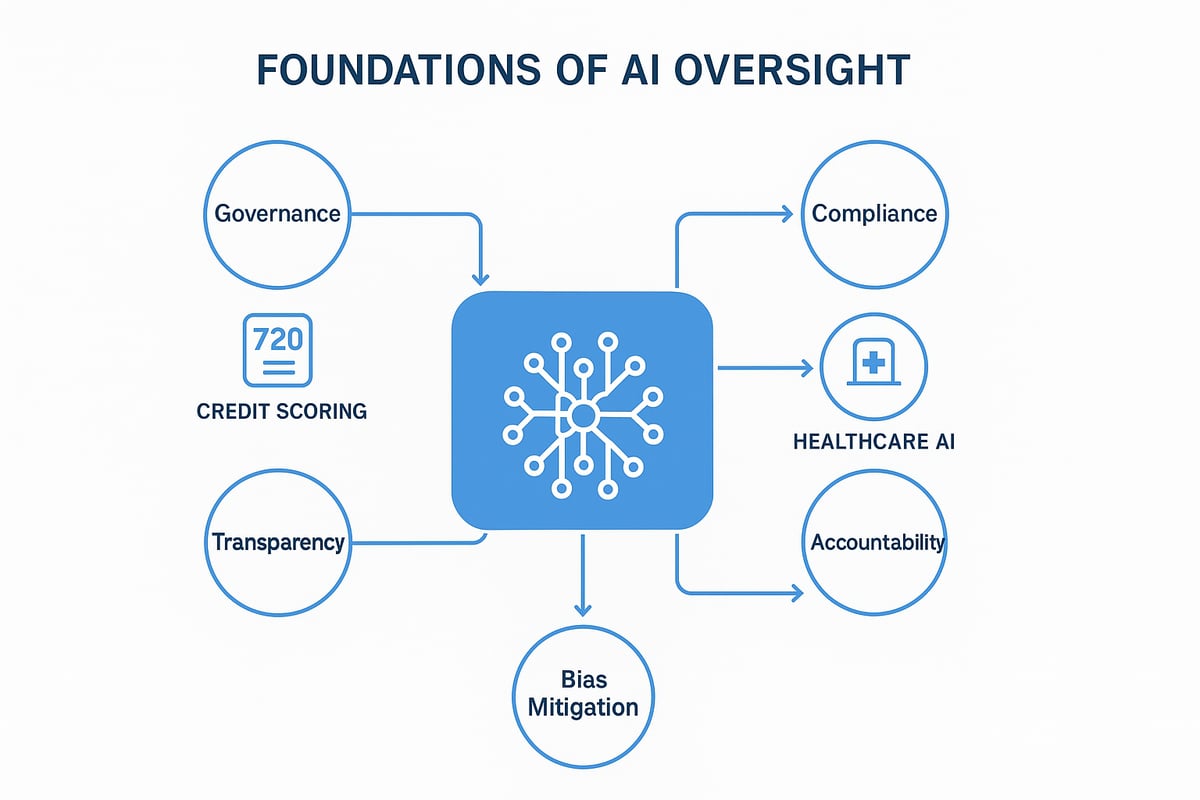

AI oversight refers to the structured processes and governance frameworks used to direct, monitor, and evaluate artificial intelligence systems within organizations. Unlike compliance, which ensures adherence to specific rules, or regulation, which is set by external authorities, AI oversight is an active, ongoing responsibility embedded in internal decision-making.

Notable examples reinforce its importance. For instance, a lack of oversight in credit scoring algorithms has led to discriminatory outcomes, while strong oversight in healthcare AI has improved patient safety. These contrasting cases illustrate why AI oversight is essential to building trust with users and the broader public.

Oversight acts as a cornerstone for responsible AI adoption, ensuring systems operate safely and ethically.

The Stakes: Risks and Opportunities

The stakes of AI oversight are high, with both significant risks and promising opportunities. Key risks include algorithmic bias, privacy breaches, lack of transparency, and operational failures that can disrupt business continuity or harm individuals. For example, biased AI hiring tools have resulted in unfair candidate selection, while privacy lapses in voice assistants have led to regulatory scrutiny.

On the opportunity side, effective AI oversight enables smarter decision-making, operational efficiency, and accelerated innovation. The pressure to get it right is also growing: according to recent studies, AI-related incidents, ranging from data leaks to unintended discrimination, have led to increased regulatory actions worldwide. Sectors like finance, healthcare, and retail face the most stringent oversight requirements.

For a deeper look at managing these risks, see this guide on AI and cyber risk management, which details practical approaches for balancing innovation and compliance.

The Regulatory Landscape

The regulatory landscape for AI oversight is rapidly evolving. Global developments such as the EU AI Act and the NIST AI Risk Management Framework set new standards for responsible AI use. Organizations are now expected not only to comply with these regulations but also to adopt proactive self-regulation to address emerging risks.

Oversight requirements differ by industry and geography. For example, financial services must adhere to strict audit and reporting standards, while healthcare organizations face rigorous privacy and safety mandates. Failure to implement effective AI oversight has resulted in substantial fines and reputational damage for several high-profile companies.

As regulatory bodies increase their focus on ethical AI, organizations must ensure their oversight frameworks are adaptable and comprehensive. Robust AI oversight is now a critical factor in sustaining trust and avoiding costly compliance failures.

Visit https://www.ctoinput.com to learn more and connect with a member of the CTO Input team.

Spend a few minutes exploring the rest of the articles on the CTO Input blog at https://blog.ctoinput.com.

Core Principles of Responsible AI Oversight

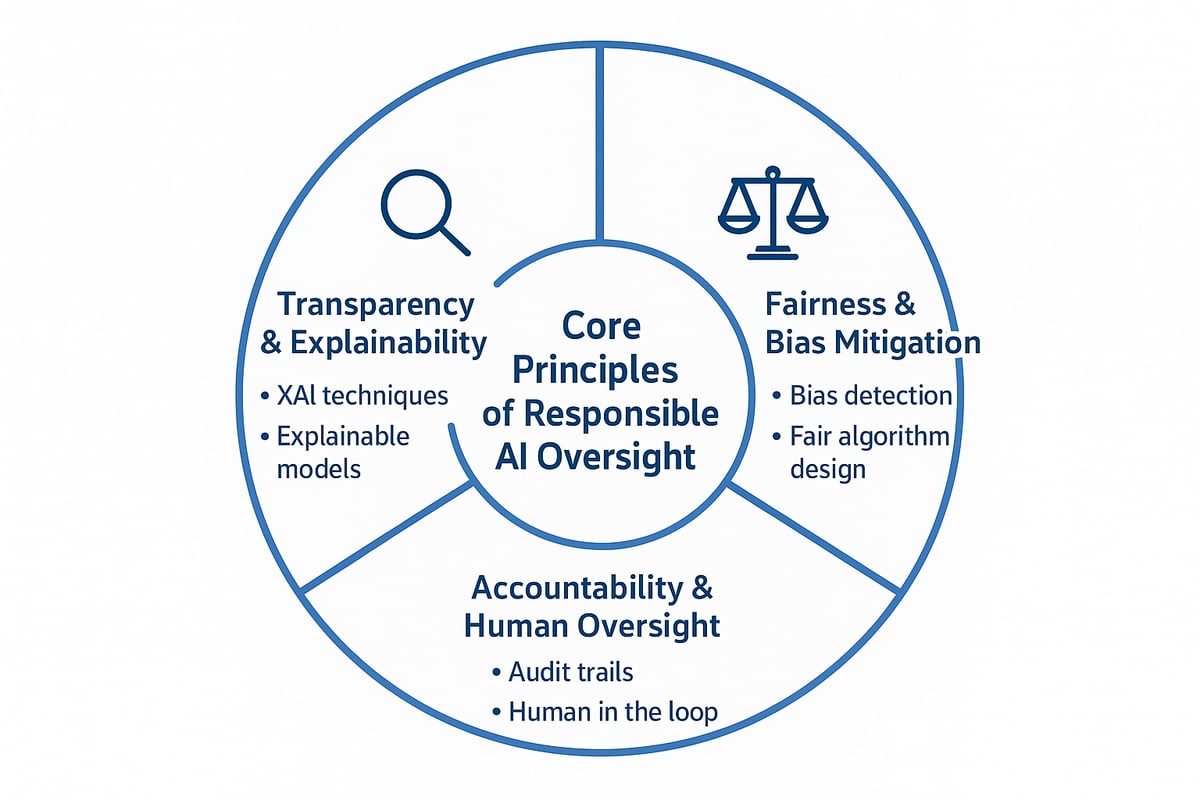

Establishing robust core principles is vital for effective AI oversight. These principles guide organizations in building trustworthy, fair, and accountable AI systems that inspire confidence among users and stakeholders.

Transparency and Explainability

Transparency is the foundation of AI oversight, ensuring that AI systems are understandable and their decisions can be traced. Without transparency, stakeholders find it difficult to trust or validate outcomes. Explainable AI (XAI) techniques, such as feature attribution, model visualization, and local interpretable model-agnostic explanations (LIME), help organizations clarify how models arrive at decisions.
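To make feature attribution concrete, here is a minimal sketch of one simple, model-agnostic variant: permutation importance, which measures how much accuracy drops when a single feature's values are shuffled. It is not LIME, and the toy model, the feature meanings, and the data are all hypothetical, chosen only for illustration.

```python
import random
from typing import Callable, Sequence

Row = Sequence[float]


def accuracy(model: Callable[[Row], int], rows: list, labels: list) -> float:
    """Fraction of rows where the model's prediction matches the label."""
    hits = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return hits / len(rows)


def permutation_importance(model, rows, labels, feature_index, seed=0) -> float:
    """Drop in accuracy after shuffling one feature column across rows."""
    baseline = accuracy(model, rows, labels)
    column = [row[feature_index] for row in rows]
    random.Random(seed).shuffle(column)  # seeded so the result is repeatable
    shuffled_rows = [
        list(row[:feature_index]) + [value] + list(row[feature_index + 1:])
        for row, value in zip(rows, column)
    ]
    return baseline - accuracy(model, shuffled_rows, labels)


# Hypothetical credit model: approves when feature 0 (income, in thousands)
# exceeds 50 and ignores feature 1 entirely.
def toy_model(row: Row) -> int:
    return 1 if row[0] > 50 else 0


rows = [[20, 1], [35, 0], [45, 1], [55, 0], [70, 1], [90, 0]]
labels = [toy_model(r) for r in rows]

income_importance = permutation_importance(toy_model, rows, labels, 0)
other_importance = permutation_importance(toy_model, rows, labels, 1)
```

Because the toy model never reads feature 1, shuffling it cannot change any prediction, so its importance is exactly zero. A reviewer can use the same check on a real model to confirm that a feature believed to be unused really has no influence.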

Case studies in healthcare and finance show that explainability boosts user trust and regulatory approval. For instance, banks using interpretable credit scoring models report higher customer satisfaction. According to recent surveys, over 70 percent of consumers believe transparency in AI is essential for trust.

The AI Accountability Policy Report by NTIA offers valuable policy guidance on enhancing transparency, risk mitigation, and assurance, supporting effective AI oversight.

Fairness and Bias Mitigation

Ensuring fairness is a central goal of AI oversight. AI systems can unintentionally amplify biases from training data, algorithms, or deployment contexts. Common sources include skewed datasets, incomplete sampling, and feature choices that act as proxies for protected attributes. Detecting and reducing bias involves techniques like re-sampling, fairness-aware algorithms, and regular audits.

Many regulators now require organizations to demonstrate fairness in AI outcomes. Methods such as disparate impact analysis and fairness metrics are used to assess and document performance. High-profile failures, such as biased hiring algorithms or facial recognition inaccuracies, highlight the need for proactive bias mitigation in AI oversight.
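As an illustration of disparate impact analysis, the sketch below computes each group's selection rate and the ratio between a protected group and a reference group. The group names and counts are invented, and the 0.8 cut-off follows the "four-fifths" rule of thumb from US employment guidance; treat it as an assumption, since the right threshold depends on jurisdiction and context.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Map each group to its share of favorable (True) outcomes."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, selected in outcomes:
        totals[group] += 1
        if selected:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(outcomes, protected, reference):
    """Selection rate of the protected group divided by the reference group's."""
    rates = selection_rates(outcomes)
    if rates[reference] == 0:
        raise ValueError("reference group has no favorable outcomes")
    return rates[protected] / rates[reference]


# Hypothetical hiring outcomes: (group, was_selected)
outcomes = (
    [("group_a", True)] * 50 + [("group_a", False)] * 50
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)

ratio = disparate_impact_ratio(outcomes, protected="group_b", reference="group_a")
flagged = ratio < 0.8  # assumed threshold; see the four-fifths rule of thumb
```

Here group_a is selected 50% of the time and group_b 30% of the time, so the ratio is about 0.6 and the model would be flagged for review. A low ratio is a signal to investigate, not proof of unlawful bias on its own.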

Organizations that prioritize fairness not only reduce legal and reputational risks but also promote innovation and inclusivity. Ongoing evaluation and stakeholder feedback are essential for sustained progress.

Accountability and Human Oversight

Clear accountability is a cornerstone of AI oversight. It requires defined responsibilities for every stage of the AI lifecycle, from data collection to deployment. Human-in-the-loop approaches, where people review and validate critical decisions, add an extra layer of assurance. This practice is especially important in sectors like healthcare, finance, and public safety.
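A human-in-the-loop rule can be as simple as a routing function that decides which model outputs may proceed automatically. The sketch below is one possible shape for that rule; the `Decision` fields, the `route` function, and the 0.9 confidence threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    case_id: str
    prediction: str
    confidence: float  # model's own score in [0, 1]
    high_impact: bool  # e.g. a denial of credit or care


def route(decision: Decision, min_confidence: float = 0.9) -> str:
    """Return 'human_review' for high-impact or low-confidence decisions."""
    if decision.high_impact or decision.confidence < min_confidence:
        return "human_review"
    return "auto"


decisions = [
    Decision("c1", "approve", 0.97, high_impact=False),
    Decision("c2", "approve", 0.62, high_impact=False),
    Decision("c3", "deny", 0.99, high_impact=True),
]
routes = {d.case_id: route(d) for d in decisions}
```

Only `c1` is handled automatically. `c2` goes to a reviewer because the model is unsure, and `c3` goes to a reviewer because the outcome is high impact no matter how confident the model is.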

Robust documentation and audit trails are necessary for tracking AI decisions and ensuring compliance. Leading organizations create detailed logs of model changes, outcomes, and reviews. Best practices include regular audits, transparent reporting, and escalation procedures for handling incidents.
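One way to make an audit trail tamper-evident is to chain entries together with hashes, so that editing an old record invalidates everything after it. The following is a minimal in-memory sketch of that idea using only the Python standard library; the event fields and model names are made up, and a production system would also need durable storage and access control.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash used before the first entry


def _digest(event: dict, prev_hash: str) -> str:
    """SHA-256 over the event and the previous entry's hash."""
    payload = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: list, event: dict) -> dict:
    """Append an event, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev_hash": prev_hash, "hash": _digest(event, prev_hash)}
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_entry(log, {"action": "model_deployed", "model": "credit-v2", "actor": "alice"})
append_entry(log, {"action": "threshold_changed", "model": "credit-v2", "actor": "bob"})
chain_ok = verify(log)
```

If someone later changes the `actor` on the first entry, `verify(log)` returns `False`, which gives auditors a cheap integrity check before they rely on the log.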

By embedding accountability into their culture, organizations can respond quickly to issues and demonstrate responsible stewardship of AI technologies. This commitment to oversight builds long-term trust and resilience.


Step-by-Step Roadmap to Implementing Effective AI Oversight

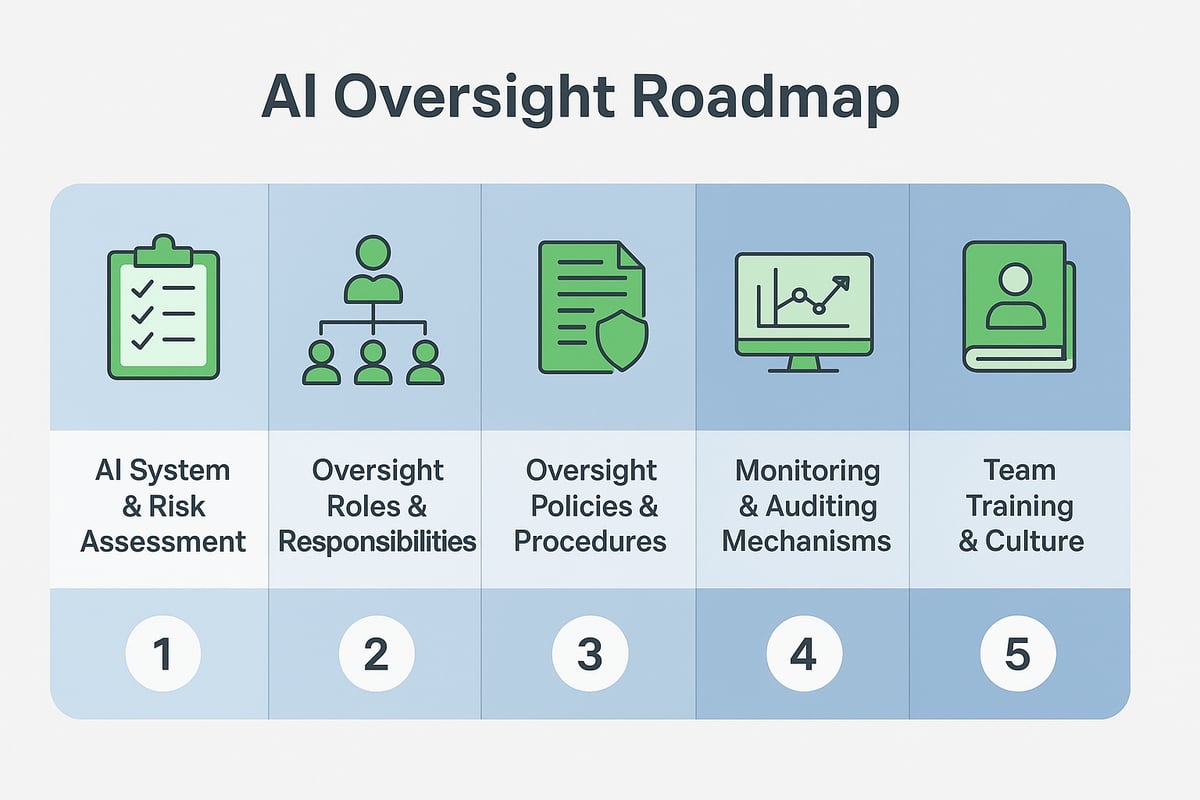

Achieving effective AI oversight requires a systematic, stepwise approach. Each step below builds on the last, ensuring oversight is embedded throughout the organization and its AI initiatives.

Step 1: Assess Current AI Systems and Risks

Begin by mapping all AI assets and use cases across the organization. This inventory should detail each system’s purpose, data sources, and user groups. Conduct a risk assessment that evaluates potential biases, privacy concerns, and operational impacts, and record the findings in established documentation formats such as model cards or datasheets.

Engage stakeholders early to understand how AI oversight can address their needs and concerns. Use tools that facilitate impact analysis and risk prioritization. For organizations seeking to align oversight with broader transformation goals, reviewing an AI transformation strategy essentials guide can provide valuable context for this foundational step.

Document results in a centralized repository to ensure transparency and facilitate future reviews.
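The inventory described in this step can start as a simple structured record per system. The sketch below captures the purpose, data sources, and user groups mentioned above and adds a deliberately crude risk score for prioritization. The three risk factors, the one-point-each scoring, and the example systems are assumptions for illustration, not an established framework.

```python
from dataclasses import dataclass


@dataclass
class AISystem:
    name: str
    purpose: str
    data_sources: list
    user_groups: list
    uses_personal_data: bool = False
    affects_individuals: bool = False  # e.g. hiring, credit, or care decisions
    fully_automated: bool = False      # acts without human review


def risk_score(system: AISystem) -> int:
    """Hypothetical additive score: one point per risk factor present."""
    return sum(
        [system.uses_personal_data, system.affects_individuals, system.fully_automated]
    )


def prioritize(inventory: list) -> list:
    """Order systems from highest to lowest risk score for review."""
    return sorted(inventory, key=risk_score, reverse=True)


inventory = [
    AISystem("demand-forecast", "Predict warehouse stock levels",
             ["sales history"], ["operations"]),
    AISystem("resume-screener", "Rank job applicants",
             ["applicant CVs"], ["HR"],
             uses_personal_data=True, affects_individuals=True, fully_automated=True),
    AISystem("chat-summarizer", "Summarize support tickets",
             ["support tickets"], ["support agents"],
             uses_personal_data=True),
]

review_order = [system.name for system in prioritize(inventory)]
```

With this data the review order is the resume screener, then the chat summarizer, then the demand forecast. Real programs usually weight factors differently and add regulatory criteria, but even a rough ordering helps decide where to spend the first assessment effort.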

Step 2: Define Oversight Roles and Responsibilities

Effective AI oversight depends on clear roles and accountability. Assemble a cross-functional AI oversight committee, including data scientists, compliance officers, business leaders, and IT specialists.

Assign specific responsibilities for monitoring, policy enforcement, and reporting. Set up escalation procedures for issues that require higher-level attention. Visual org charts can help clarify reporting lines and decision-making authority.

Regularly review and update committee membership to reflect evolving business needs and new AI projects. This structure ensures oversight tasks are not siloed and remain actionable.

Step 3: Develop Oversight Policies and Procedures

Draft comprehensive AI oversight policies that address data governance, model development, deployment, and post-launch monitoring. Integrate these policies into the AI development lifecycle, using policy templates and checklists to support consistency.

Establish continuous monitoring protocols for model performance and compliance. Define clear procedures for managing incidents, updating models, and documenting changes.

Encourage teams to reference these policies regularly. Make oversight procedures accessible to all relevant staff, ensuring compliance is practical rather than burdensome.

Step 4: Establish Monitoring and Auditing Mechanisms

Implement automated tools to monitor AI system performance, compliance, and ethical standards in real time. Schedule regular audits, both internal and with third-party experts, to validate oversight effectiveness.

Track metrics such as model accuracy, bias indicators, and audit trail completeness. Use AI dashboards to visualize key performance indicators and flag anomalies early.

Effective AI oversight relies on robust documentation and transparent reporting. Audit logs should be maintained and reviewed routinely to support accountability and continuous improvement.
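The monitoring described in this step often reduces to comparing reported metrics against agreed limits and alerting on breaches. Below is a small sketch of such a check. The metric names and threshold values are hypothetical placeholders that each organization would set for itself.

```python
# Hypothetical limits: ("min", x) means the metric must stay at or above x,
# ("max", x) means it must stay at or below x.
THRESHOLDS = {
    "accuracy": ("min", 0.90),
    "disparate_impact_ratio": ("min", 0.80),
    "audit_log_completeness": ("min", 0.99),
    "p95_latency_ms": ("max", 500),
}


def check_metrics(metrics: dict, thresholds: dict = THRESHOLDS) -> list:
    """Return one alert string per breached or missing metric."""
    alerts = []
    for name, (kind, limit) in thresholds.items():
        if name not in metrics:
            alerts.append(f"{name}: no value reported")
            continue
        value = metrics[name]
        if kind == "min" and value < limit:
            alerts.append(f"{name}: {value} is below the minimum {limit}")
        elif kind == "max" and value > limit:
            alerts.append(f"{name}: {value} is above the maximum {limit}")
    return alerts


latest = {
    "accuracy": 0.93,
    "disparate_impact_ratio": 0.72,
    "audit_log_completeness": 1.0,
}
alerts = check_metrics(latest)
```

In this example two alerts are raised: the bias indicator has fallen below its limit, and latency was never reported. Treating a missing metric as an alert is a deliberate choice here, since silent gaps in monitoring are themselves an oversight failure.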

Step 5: Train Teams and Foster an Oversight Culture

Success in AI oversight hinges on ongoing education and a culture of responsibility. Develop training programs for both technical and non-technical staff, covering ethical AI use, risk awareness, and regulatory requirements.

Promote open discussion of oversight challenges and ethical dilemmas. Recognize and reward teams that demonstrate transparency and proactive risk management.

A strong oversight culture empowers employees to act as stewards of AI, ensuring organizational values and compliance standards are upheld at every stage.


Tools and Technologies for AI Oversight

Selecting the right tools and technologies is essential to effective AI oversight. Organizations must combine automated platforms, comprehensive data management, and robust reporting to build trust and transparency. Each component plays a distinct role in supporting responsible AI oversight across industries.

Automated Oversight Platforms

Automated oversight platforms form the backbone of modern AI oversight. These platforms offer features like real-time bias detection, explainability modules, and audit trail management. By integrating with existing AI workflows, they allow organizations to monitor and govern models at scale.

| Feature | Open-Source Solutions | Commercial Solutions |
|---|---|---|
| Bias Detection | Yes | Yes |
| Explainability (XAI) | Partial | Advanced |
| Audit Trails | Limited | Comprehensive |
| Support & Updates | Community | Vendor/Enterprise |

In financial services, adoption of such platforms has improved compliance and reduced operational risk. Selecting the right platform depends on organizational needs and the maturity of your AI oversight processes.

Data Management and Security Tools

Ensuring secure data management is fundamental for AI oversight. Effective tools track data lineage, manage provenance, and enforce access controls, helping organizations safeguard sensitive information. Integration with existing IT systems is critical for seamless operation.

Healthcare AI projects, for example, require meticulous data governance to maintain patient privacy and regulatory compliance. Leveraging compliance and cybersecurity frameworks further strengthens AI oversight by aligning practices with industry standards and legal requirements.

Reporting and Documentation Solutions

Transparent reporting and thorough documentation are key pillars of AI oversight. Automated solutions generate compliance records, maintain audit logs, and facilitate reporting to stakeholders. These tools not only support regulatory requirements but also foster trust among partners and customers.

In manufacturing, regulatory reporting tools have streamlined oversight by providing clear audit trails and real-time dashboards. Adopting these solutions helps keep your AI oversight efforts transparent and accountable.


Best Practices and Common Pitfalls in AI Oversight

Proven Best Practices for Oversight Success

Successful AI oversight programs share several core practices that set them apart. Continuous improvement is crucial. Regular feedback loops allow organizations to update oversight mechanisms as AI systems evolve and new risks emerge.

Stakeholder engagement is another vital best practice. Bringing together voices from business, compliance, IT, and end users helps identify blind spots and builds trust in the oversight process. Benchmarking against industry leaders, as well as learning from cross-sector insights, enables teams to refine their strategies.

Consider the following summary table:

| Best Practice | Benefit |
|---|---|
| Continuous improvement | Adapts oversight to new risks |
| Stakeholder engagement | Builds trust and awareness |
| Benchmarking | Drives higher performance |

These best practices help ensure AI oversight supports both innovation and risk management.

Avoiding Common Oversight Mistakes

Even mature organizations can fall into common AI oversight pitfalls. Overreliance on automation without human intervention often leads to missed errors or unchecked biases. Inadequate documentation and record-keeping make it difficult to trace decisions or prove compliance during audits.

Ignoring evolving regulatory requirements is another major risk. Recent AI risk management assessments show that even leading AI companies have faced challenges due to lax or outdated oversight practices.

To avoid these mistakes, organizations should:

- Maintain thorough documentation and audit trails

- Regularly review oversight frameworks against new regulations

- Balance automation with human review and accountability

Learning from oversight failures helps teams refine their approach and prevent costly missteps.

CTO Input: Strategic Technology Alignment for Effective AI Oversight

Strategic technology alignment is foundational to robust AI oversight. When AI initiatives are closely tied to business goals and compliance needs, organizations can proactively address risks and opportunities.

Fractional CTO, CIO, or CISO leadership brings specialized oversight experience without the overhead of full-time hires. These experts help craft oversight frameworks that bridge the gap between technology and governance. Aligning AI efforts with the right governance structures, as discussed in Board technology governance best practices, ensures compliance and sustainable growth.

Ready to strengthen your AI oversight? Visit https://www.ctoinput.com to connect with a member of the CTO Input team. For further insights, spend a few minutes exploring the rest of the articles on the CTO Input blog at https://blog.ctoinput.com.

Future Trends and Evolving Challenges in AI Oversight

As technology rapidly evolves, AI oversight must adapt to address new complexities. Organizations face a shifting landscape where traditional approaches may not suffice. Looking ahead, several trends are shaping the future of responsible oversight, demanding fresh strategies and global cooperation.

The Rise of Autonomous and Adaptive AI Systems

The emergence of autonomous and adaptive AI systems brings new oversight challenges. These self-learning models can alter behaviors in real time, making traditional monitoring less effective. As a result, organizations must develop robust frameworks for continuous evaluation and intervention.

For instance, autonomous vehicles and adaptive recommendation engines require real-time risk assessments. Ongoing model validation, scenario testing, and active human oversight are essential to mitigate potential failures. Embedding AI oversight directly into the development lifecycle helps keep evolving models aligned with ethical standards and regulatory expectations.
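For models that keep changing after deployment, ongoing validation usually includes some form of drift check. One common choice is the Population Stability Index (PSI), which compares the distribution of a score or feature at validation time with its distribution in production. The sketch below is a minimal pure-Python version. The equal-width binning, the sample data, and the 0.2 alert threshold are illustrative assumptions rather than a standard.

```python
import math


def psi(expected: list, actual: list, bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline and a current sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant baselines

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # Floor each share at eps so empty bins do not cause log(0)
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]       # scores at validation time
shifted = [0.5 + i / 200 for i in range(100)]  # scores after the model adapted

drift = psi(baseline, shifted)
needs_review = drift > 0.2  # illustrative alert threshold, not a standard
```

Identical distributions give a PSI of zero, and the value grows as the two samples diverge. In this example the production scores have moved entirely into the upper half of the baseline range, so the check triggers a human review.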

Global Collaboration and Standardization

As AI oversight expands across borders, the need for international standards becomes critical. Varying regulations and compliance requirements can create confusion, especially for organizations operating globally. Industry consortia and alliances are working to harmonize best practices and establish unified frameworks.

One notable example is the Unified Control Framework for AI governance, which integrates risk management and regulatory compliance for enterprise-level oversight. Such approaches streamline reporting, facilitate cross-border cooperation, and help organizations maintain transparency while navigating complex legal landscapes.

Preparing for the Next Generation of AI Risks

The future of AI oversight will be defined by emerging threats. Deepfakes, adversarial attacks, and rapidly evolving model architectures present new risks that demand proactive strategies. Organizations must prioritize continuous education, scenario planning, and regular threat assessments.

Recent regulatory trends, such as the Transparency in Frontier Artificial Intelligence Act, emphasize the importance of risk assessment and transparency for powerful AI models. By adopting forward-looking oversight practices, businesses can stay ahead of threats and foster trust among stakeholders.


As we’ve explored, building effective AI oversight isn’t just about meeting regulations. It’s about aligning technology with your organization’s goals and ensuring your teams can innovate confidently and responsibly. If you’re ready to translate these best practices into real business outcomes, let’s have a conversation about your unique challenges and aspirations. Our seasoned fractional CTO, CIO, and CISO leaders are here to help you assess your current state, identify opportunities, and chart a path to responsible, future-ready AI.

Schedule A Strategy Call to start making your technology a true competitive advantage.