You are a growth-minded CEO or founder who has said yes to one, two, maybe three AI pilots. The demos looked sharp. The vendor decks were glossy. Your team was excited.

Yet your EBITDA has not moved. Your leadership team is asking what your AI strategy is, and you do not have a clean answer. You sense a growing risk that you are burning cash on AI projects that never pay off.

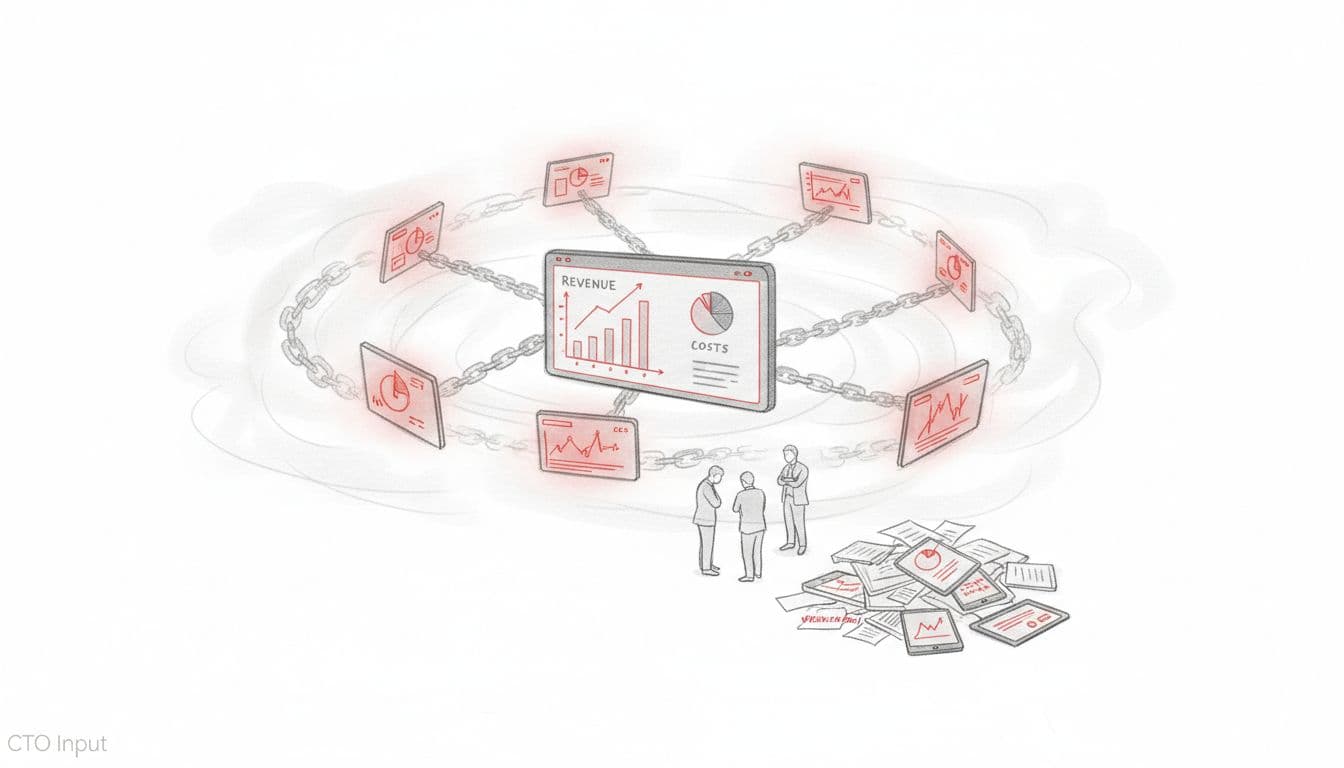

That is AI pilot purgatory: pilots that sit in limbo, forever "promising," never rolled out to production for company-wide use. The stakes are not abstract. Margins, board confidence, customer trust, and competitive position are all on the line.

The core issue is usually not the tools. It is hidden organizational barriers: unclear ownership, weak data foundations, silos, and fear of risk. CTO Input works as a seasoned, neutral guide for mid-market companies, helping you move from scattered AI pilots to a focused enterprise AI portfolio that actually supports your growth plan.

What AI Pilot Purgatory Really Looks Like In Your Business

AI pilot purgatory is not a theory. It shows up in your monthly reports, your project list, and your board prep.

You see busy teams, impressive proofs of concept (POCs), long vendor meetings, and rising tech spend. What you do not see is clear business value: revenue lift, lower cost to serve, or faster cycle times. Without that, there is no clear return on investment (ROI). That gap is the warning signal.

Promising AI demos, but no change in the monthly numbers

You have seen it. A sales forecasting pilot that predicts pipeline with pretty dashboards. A chatbot that answers common support questions. An operations model that flags late shipments.

In the room, everyone nods. The proof of concept looks great.

Then the next month, revenue looks the same. Cost per ticket is flat. Cycle time and error rates barely move. You start to feel like you are paying for theater.

This is common. Recent research estimates that 80 to 95 percent of AI projects never deliver real business value or make it past the test phase. One report on why 95% of AI pilots fail found that most generative AI efforts never touch core workflows or reach deployment. The pilot "succeeds" in a demo, then dies quietly, and broader AI adoption stalls with it.

Endless pilots and one-off experiments that never connect

Marketing runs a chatbot pilot. Finance tries anomaly detection for fraud or billing errors. Operations tests computer vision in the warehouse.

Each team works with its own vendor, its own data extract, and its own success story. None of these pilots shares a common data view, and no one works across functions toward a shared goal. That blocks the path to production.

You pay for multiple tools, similar models, and repeated integration work. Leaders start to lobby for their favorite pilot. “Our use case should get the budget.” The politics heat up.

You are left in the middle. You have to pick winners and losers without a clear way to compare the pilots or to scale any of them into production.

Vendors, buzzwords, and rising board pressure

Vendors promise magic. Boards ask, “What is our enterprise AI strategy?” Regulators and large partners begin adding AI and cyber questions into audits and reviews.

Your leadership team feels squeezed between hype and risk. Without a trusted senior technology or security leader at the table, AI decisions drift toward the loudest vendor or the most excited internal champion.

The natural question follows: if the tools are not the main issue, what is?

The Real Reasons Your AI Pilots Stall Before They Help You Scale

The pattern is not random. A small set of organizational blockers keeps AI stuck in pilot purgatory instead of scaling. These are decision problems, not exotic technical puzzles.

AI pilots are not tied to a clear business problem or owner

Many pilots start because someone is curious about what a tool can do. A head of marketing wants to “try GPT.” A head of ops wants to “try computer vision.”

There is no shared agreement on the specific business pain to solve. No one writes down the metric that should move, like days in cash, first-contact resolution, error rate, or order accuracy. There is no single business owner, backed by executive leadership, who is accountable for the outcome.

When budgets tighten, these projects are easy to cut. They are “nice to have.”

In healthy programs, every AI pilot starts with one or two target metrics and a named business owner. That person signs off on success criteria before any code gets written.

Your data and systems are not ready for real-world AI use

On paper, you have lots of data. In practice, it is scattered across CRM, ERP, email, spreadsheets, and vendor portals. Data quality is poor. Definitions do not match. History is missing. Feeds break. Manual work fills the gaps.

Pilots often hide this problem. A data scientist pulls a clean sample, fixes issues by hand, and shows strong results in a proof of concept. The moment you plug the model into live systems, the fragility shows up, because weak data governance means nothing keeps the live feeds as clean as the hand-fixed sample.

You may also hit infrastructure gaps. Legacy systems without APIs. No simple way to automate handoffs. No MLOps (machine learning operations) practices, so no monitoring to show when a model drifts or breaks in production. As some guides on scaling AI from pilot to production point out, this "hidden plumbing" is where many companies stall. Strong data governance and MLOps practices are what make a model dependable in real-world use.

Siloed teams and weak communication between business, IT, and data

Your AI work might sit with a small analytics or data science team. They speak in models, features, and accuracy.

Operations talks in throughput and on-time shipments. Sales talks in quota, conversion, and win rate. IT talks in uptime, tickets, and security.

Without a shared plan, these groups talk past each other.

Picture a sales forecasting model that is 92 percent accurate in a lab spreadsheet. IT is nervous about who will support it. Sales leaders never see it in the CRM or the weekly pipeline review. No one changes behavior. The pilot is "done," but nothing in the field changes.

Risk, compliance, and trust concerns stop AI at the last mile

Legal, compliance, and security teams often show up at the end of a project. They are handed a nearly finished pilot and are asked to “sign off.”

They raise fair questions. Where did the training data come from? Can we explain decisions if a regulator asks? How do we handle bias or privacy? What if the vendor has a breach?

Because there is no simple AI governance playbook, leaders default to caution. The project stays in "pilot" mode forever, because that makes the risk feel lower.

Front-line staff also hesitate. If they were not part of the design, they do not trust the model. They build their own workarounds or ignore AI suggestions.

As one article on why AI projects get stuck in pilot purgatory notes, trust is often the real blocker, not accuracy.

No one owns the roadmap from pilot to production

Building the first model is one kind of work. Deploying it as a reliable, audited, supported production system is a different job.

You need:

- Process changes

- User training

- Monitoring and alerts

- Ongoing budget and support

Many mid-market companies lack a senior technology leader who can connect business strategy, AI opportunity, cyber risk, and change management. The result is predictable. Promising pilots sit in a forgotten slide deck with no path to production rollout.

A Simple Path Out of AI Pilot Purgatory: Decisions You Can Make This Quarter

You do not need a massive AI program. To escape AI pilot purgatory, you need a small number of clear moves in the next 90 days.

CTO Input often helps leadership teams design and sequence these decisions so AI supports, not distracts from, the growth plan.

Pick one or two AI use cases that clearly connect to your P&L

Stop funding ten side projects. Pick one or two enterprise AI use cases that move real money and deliver a strong ROI.

Good examples:

- Reduce churn in your highest-value customer segment

- Speed quote-to-cash by cutting manual touches

- Lower support cost per ticket while keeping CSAT high

Use a simple filter: Is the data available, is the process stable enough, and can we measure before and after? If the answer to any of these is no, it is not a good candidate for the next quarter.

Assign real owners and success metrics before you fund the next pilot

Set a basic rule. No AI pilot without:

- A named business owner

- A named technology partner

- Two or three agreed metrics tied to business outcomes

Bring risk and compliance into the design, not just the go-live meeting. This lets you compare pilots side by side and decide which to scale, which to adjust, and which to stop.

The tone with your team can be firm but supportive. AI work is welcome, but it has to tie to real business outcomes and to the growth plan.

Invest in the minimum data, process, and change work needed to scale

You do not always need a full platform rebuild to get out of AI pilot purgatory.

Often you need a handful of focused moves to scale enterprise AI:

- Clean and connect the few data sources that feed your chosen use cases, with strong data governance and reliable data pipelines

- Automate one or two key handoffs so the model can run in real time

- Train the people who will live with the new workflow so they actually adopt it

Think in 90-day steps. Take one pilot, make it production-grade in a limited area, use change management to bring the affected team along, prove the impact, then expand. A fractional CTO or CIO can help design this path without adding a full-time executive role.

Get a neutral guide to align AI, cyber risk, and your growth plan

You do not need another tool. You need a guide who sits on your side of the table.

CTO Input serves growth-minded CEOs, COOs, and founders who want AI, cloud, and cybersecurity to support a clear growth plan. An external, neutral advisor can help you:

- Sort and rank AI projects by impact and feasibility

- Assess data readiness and risk in plain language

- Build a simple roadmap from pilot to production that fits your budget and that your teams will actually adopt

A low-friction next step could be a short diagnostic or strategy call to review your current pilots, prioritize AI projects, and decide which one should scale first.

Conclusion: Escaping AI Pilot Purgatory

AI pilot purgatory is rarely a technical problem. It is usually an organizational one: a matter of focus, ownership, data readiness, and comfort with risk.

You do not have to fix everything at once. You simply need to move one or two high-value AI pilots into production, prove their impact, and use that as the standard for future AI work. That is how you rebuild confidence with your board and your team.

Picture a near future where AI shows up in cleaner numbers, fewer surprises, and faster execution. Projects are smaller, better aimed, and easier to explain. Leadership conversations shift from "What are we trying?" to "What is working, and how do we scale it?" That shift starts with executive leadership.

If you want a seasoned partner in that shift, including the internal upskilling your teams need to manage technology strategy, visit https://www.ctoinput.com to see how fractional CTO, CIO, and CISO leadership supports enterprise AI for mid-market companies. For more practical guidance on AI, cyber risk, and technology strategy, explore the CTO Input blog at https://blog.ctoinput.com.