That late-night alert isn't just a technical problem. It’s the start of a frantic, middle-of-the-night scramble that pulls executives into chaotic calls and ends with fumbled answers to your board and insurers. You keep paying for new security tools, but the mess stays the same.

This is the expensive reality for leaders who mistake having a document for being prepared. The real cost of a breach isn't the technical fix. It's the operational meltdown, the erosion of customer trust, and the painful loss of control when you can least afford it. The goal of incident response planning isn't to create a binder. It’s to restore order and protect what matters.

The Real Problem: Your Operating System Is Built for Peacetime

You have smart people. You have expensive tools. You might even have a document somewhere titled “Incident Response Plan.” So why does a minor alert still feel like a five-alarm fire?

The chaos isn't happening because of a lack of effort. It's a failure of the underlying operating system that governs how your people make decisions under pressure. Your biggest vulnerability isn't in your firewall. It’s in the ambiguous, high-friction handoffs between your technical, legal, and communications teams. When an incident hits, your peacetime system grinds to a halt.

Smart People Fail in Ambiguous Systems

When a crisis hits, the disconnect between departments becomes glaringly obvious. The technical team is heads-down, focused on containing the threat, but every move they make has legal and reputational ripple effects. Meanwhile, the legal and comms teams are starved for clear information to manage the fallout, but all they get is technical jargon they can't translate.

This friction creates dangerous delays. Key decisions stall while teams get stuck debating who has the authority to make a call. The result is a slow, disjointed response that dramatically increases the blast radius of the incident and burns through customer trust.

A Scenario Where Implied Ownership Fails

Picture a fast-growing SaaS company that thought it was ready. A security alert came in from a third-party vendor late on a Friday. The on-call engineering team saw it, but they hesitated. Should they shut down the integration? It would kill a key feature for their biggest enterprise customers.

They tried to reach their manager, who was unavailable. Escalating directly to the CTO felt like overstepping. Over in marketing, the comms lead heard whispers but had no concrete details to draft a statement. For four critical hours, everyone assumed someone else owned the final decision.

By the time the leadership team was finally pulled together, the minor vendor issue had snowballed into a major service outage affecting 30% of their customer base. The root cause wasn't a technical failure but an organizational one: no one had the explicit authority to make a tough call quickly. This is what happens when decision rights are implied, not explicit.

The Decision: Pre-Approve Who Decides What, and When

Your most important choice is made long before a crisis arrives: deciding who gets to decide what, and when. Any confusion here doesn't just slow you down. It multiplies your risk. Who has the final say to declare a full-blown incident? Who can make the gut-wrenching call to pull a revenue-generating system offline? Who signs off on the precise wording going out to customers?

If those answers are fuzzy, you’ve already set your team up to fail. Good incident response planning is an exercise in pre-delegating authority. You’re making the tough calls now, while things are calm, so your team can act with clarity and confidence when everything is on fire.

This isn't a technical problem. It’s a governance failure. It's what happens when delegated authority is assumed instead of explicitly assigned. The fix is to make your governance inspectable. For the board, this translates abstract risk management into concrete proof of oversight. They need to see that decision rights are clear, risk appetite is defined, and a system exists to prove it works.

A Framework for Clear Decision Rights

To kill the ambiguity, map out decision rights against specific triggers. This creates a clear line from a potential event to a single, accountable owner and a pre-defined escalation path. For your top three incident scenarios, name the owner for these critical calls:

- Incident Declaration: Who has the authority to officially kick off the response plan and assemble the core team?

- System Isolation: Who can approve taking a critical system offline, knowing it will cause operational pain but will reduce the blast radius?

- External Communication: Who signs off on the final public statements to customers, partners, and regulators?

- Resource Allocation: Who is empowered to approve emergency spending for outside experts or new tools on the spot?

These roles aren't just for tech leads. As we cover in our guide to incident command structures, the owners are often a blend of technical, legal, and operational leaders. Naming them now transforms your plan from a document you hope works into a reliable system for making fast, smart, and defensible decisions. This is how you take back control.

The Plan: A 30-Day Move to Restore Control

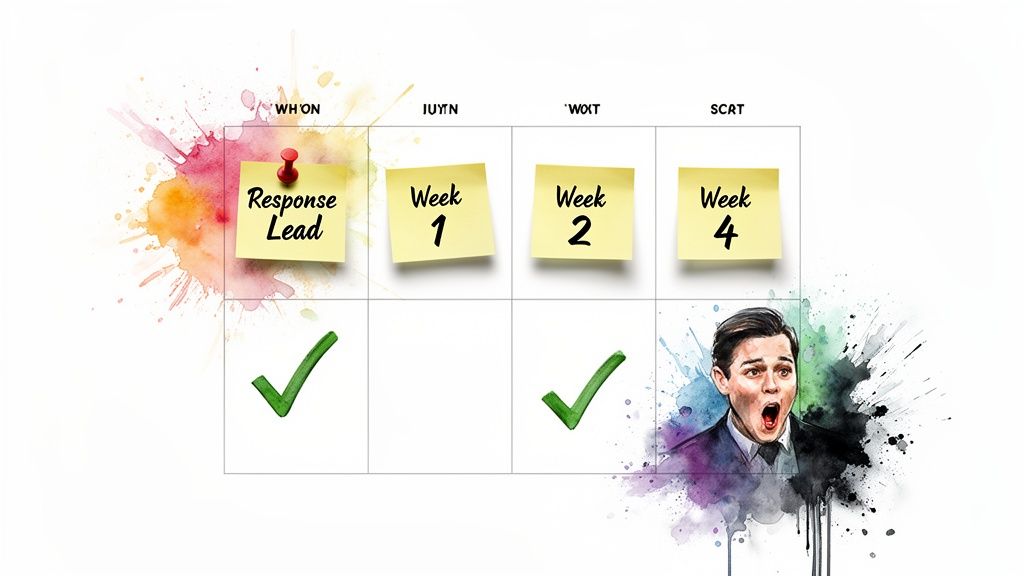

Enough theory. This is your 30-day plan to establish a calm, controlled rhythm for incident response. The goal isn't a perfect, 100-page document. It's to cut through ambiguity and build real momentum, fast. The objective is simple: end the month with a working kickoff runbook for your top three incidents, a named response leader, and a weekly review cadence.

Week 1: Name the Owner and Define the Outcome

Your first move is to name one single Incident Response Lead. This is not a committee. It's an individual with the authority to pull the right team together. Their first task is to define the 30-day outcome: a simple, one-page "kickoff runbook" for your top three most likely incidents, like ransomware, a major cloud outage, or a business email compromise.

Week 2: Map the Handoffs and Define Done

The Incident Response Lead maps the critical handoffs. Who on the technical team feeds information to the communications lead? Who gets the final go-ahead from legal before a customer notification is sent? Next, define "done" for the kickoff phase: the core team is assembled, the incident severity is classified, and executives are notified, all within 60 minutes of declaration. This becomes your first inspectable metric. Drawing on established frameworks like these Incident Response Best Practices for SREs can provide a solid foundation.

Week 3: Remove One Blocker and Ship One Visible Fix

The Incident Response Lead's job is to find and eliminate one major blocker slowing down your response. It could be an outdated on-call list or confusing access permissions for security tools. The "fix" is to run a 90-minute tabletop exercise. Get the core team in a room and walk through one of the new kickoff runbooks. This simulation will immediately expose friction points. A tested plan is a critical layer of defense, as we cover in our guide on how to prevent data breaches.

Week 4: Start the Weekly Cadence and Publish a One-Page Proof Snapshot

Finally, establish an operational rhythm. Your Incident Response Lead starts a 30-minute weekly meeting to review alerts, update runbooks, and track readiness. To close out the month, the owner publishes a one-page "proof snapshot" for leadership. It shows the named owners, the kickoff runbooks, and the time-to-assemble metric from the exercise. This is inspectable proof that you are building control.

Proof: What to Track to Show Your Plan Is Real

A plan on a shared drive is just hope. Your board, auditors, and insurers need proof that your team can execute that plan under fire. This is how you move beyond compliance theater and into a state of verifiable readiness. Inspectable proof translates technical activity into the language of governance: decision rights, delegated authority, and risk management.

From Abstract Plans to Concrete Signals

To build this proof, track a few critical signals that reveal the health of your response capability.

Start by focusing on these three measurable signals:

- Time to Assemble (TTA): How long does it take to get the core decision-making team on a call after an incident is declared? Your target should be under 15 minutes. A high TTA signals chaos in your on-call schedules.

- Time to Triage (TTT): Once assembled, how quickly can the team classify the incident's severity and potential business impact? This should happen within 30 minutes. A slow TTT means your team lacks clear classification criteria.

- Playbook Adherence Rate: During tabletop exercises, what percentage of critical steps from the playbook did the team actually follow? Aim for 90% or higher. This tells you if your plans are practical tools or just theory.

Proof isn't a report written weeks after the dust settles. It's the real-time evidence of your team’s ability to make clean decisions under pressure. It's the meeting logs and decision records that demonstrate a controlled, repeatable process.

Building Your Board-Ready Proof Pack

Think of your proof pack as a living collection of evidence, updated after every test and real-world incident. The goal is a simple, one-page dashboard any executive or board member can understand in seconds. You can get a baseline by conducting an incident response readiness assessment.

Your dashboard snapshot should include:

- The date of the last tabletop exercise for each critical incident type.

- Your current TTA and TTT metrics from the most recent test.

- A list of the named owners for key decision-making roles.

- The date the core communication plan was last updated.

Publishing this proof forces accountability. It turns readiness from a project into a continuous operational practice. This is the evidence that closes the gap between having a plan and being a resilient organization.

Closing the gap between chaos and control doesn't require a massive project. It requires a decision to install a real system for handling pressure. By making ownership explicit and defining decision rights before something goes wrong, you build the guardrails that keep your organization safe and allow your teams to execute with confidence.

CTO Input helps leaders restore clear ownership and reliable execution. We install the operating system that reduces coordination tax and risk at the same time.

Ready to replace frantic fire drills with a calm, inspectable system? Book a clarity call today to outline your 30-day plan to restore control.